TUMTraf Dataset: Multi-sensor datasets for mobility research

High-resolution datasets

With a constellation of seven sensor stations equipped with more than 60 state-of-the-art, multi-modal sensors and a road network coverage of approximately 3.5 kilometers, the TUMTraf Dataset offers mobility researchers, industry partners, public authorities and policy makers high-resolution, curated datasets that capture real-world traffic along freeways, country roads and urban traffic intersections. On the one hand, the datasets contain labeled, time-synchronized and anonymized multi-modal sensor data from area-scanning cameras, Doppler radars, LiDARs and event-triggered cameras for a variety of traffic- and weather-related scenarios; on the other hand, they contain abstract digital twins of the traffic objects with position and trajectory information.

Current Releases and Registration

The TUMTraf Dataset is available for download upon registration. Please register here to receive the download links. The complete set currently comprises the following major releases, totaling more than 50 GB of data.

| Release | Description |
|---|---|
| R4 | The V2X Cooperative Perception Dataset. |
| R3 | Spatiotemporally synchronized event-based and RGB camera dataset. |
| R2 | Four road scenarios at a busy intersection under different weather conditions, with data from two cameras and two LiDARs. |
| R1 | Three different traffic scenarios from the autobahn. |
| R0 | Multiple sets of randomly selected camera and LiDAR sequences from the autobahn. |

Release notes

March 2024: The R4 release, TUMTraf V2X, introduces a perception dataset for cooperative 3D object detection and tracking. It contains 2,000 labeled point clouds and 5,000 labeled images from five roadside and four onboard sensors, with 30k labeled 3D boxes with track IDs along with precise GPS and IMU data. We labeled eight object categories and covered occlusion scenarios with challenging driving maneuvers such as traffic violations, near-miss events, overtaking, and U-turns.

January 2024: The R3 release, TUMTraf Event, publishes synchronized images from an event-based and an RGB camera, as well as a combined representation of both. The dataset contains 50,496 2D bounding boxes covering various traffic scenarios during the day and at night. Among other things, TUMTraf Event enables research into the fusion of both sensor systems on stationary intelligent infrastructure.
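Fusing the two cameras can, for example, be used to suppress false positives of the RGB detector. The sketch below shows one simple late-fusion cross-check and is an assumption, not the fusion method of the TUMTraf Event paper: it assumes both detectors output axis-aligned 2D boxes `(x1, y1, x2, y2)` in a shared, already-calibrated image plane, and the helper names `iou` and `cross_check` as well as the 0.5 threshold are hypothetical.

```python
from typing import List, Tuple

# Hypothetical box convention: (x1, y1, x2, y2) in pixels, with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cross_check(rgb: List[Box], event: List[Box], thr: float = 0.5) -> List[Box]:
    """Keep only RGB detections that overlap some event-camera detection by IoU >= thr."""
    return [r for r in rgb if any(iou(r, e) >= thr for e in event)]


rgb_dets = [(0, 0, 10, 10), (50, 50, 60, 60)]  # the second box has no event support
event_dets = [(1, 0, 11, 10)]
print(cross_check(rgb_dets, event_dets))
```

Event cameras respond to brightness changes, so a stationary object may produce few events and its RGB detection would be discarded by this strict rule; a practical fusion would weigh both sources rather than simply intersect them.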

June 2023: The R2 release, TUMTraf Intersection, focuses on traffic at a busy intersection, captured with labeled LiDAR point clouds and synchronized camera images. The annotations are provided as 3D bounding boxes with track IDs. This release includes 4,800 camera images and 4,800 point clouds, annotated with a total of more than 57,400 3D bounding boxes. The labeled objects belong to ten classes and perform complex driving maneuvers such as left and right turns, overtaking, and U-turns. In addition to the data, we provide the calibration data of the individual sensors so that sensor data fusion is possible. We also recommend our TUMTraf-Devkit, which makes processing the data much easier.

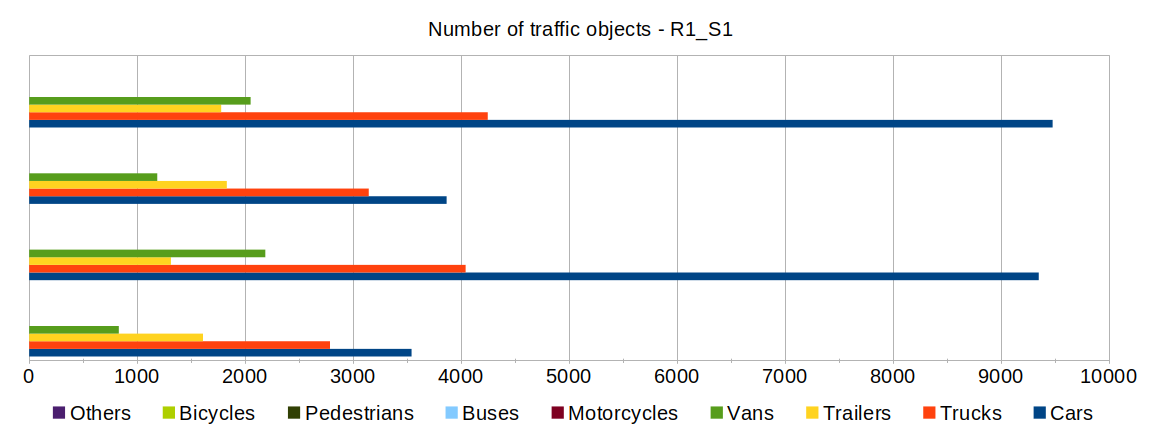
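With the provided calibration, LiDAR points can be projected into a camera image for fusion. The following sketch assumes a pinhole model in which a single 3x4 matrix P = K[R|t] combines the camera intrinsics K with the LiDAR-to-camera extrinsics [R|t]. The matrix values and the function names `project_point` and `points_in_image` are hypothetical and do not reflect the actual TUMTraf calibration files or the dev-kit API.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical 3x4 projection matrix P = K [R | t], stored row-major.
Matrix3x4 = Sequence[Sequence[float]]
Point3D = Tuple[float, float, float]


def project_point(P: Matrix3x4, xyz: Point3D) -> Optional[Tuple[float, float]]:
    """Project one 3D point to pixel coordinates (u, v), or None if not in front of the camera."""
    X = (xyz[0], xyz[1], xyz[2], 1.0)  # homogeneous coordinates
    u, v, w = (sum(row[i] * X[i] for i in range(4)) for row in P)
    if w <= 0:  # non-positive depth: the point is not in front of the camera
        return None
    return u / w, v / w


def points_in_image(P: Matrix3x4, points: List[Point3D],
                    width: int, height: int) -> List[Tuple[float, float]]:
    """Project points and keep only those that land inside the image bounds."""
    pixels = []
    for p in points:
        uv = project_point(P, p)
        if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
            pixels.append(uv)
    return pixels


# Made-up intrinsics (focal length 1000 px, principal point (960, 600)) and
# identity extrinsics, i.e. the example points are already in the camera frame.
P_example = [
    [1000.0, 0.0, 960.0, 0.0],
    [0.0, 1000.0, 600.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
print(points_in_image(P_example, [(1.0, 0.5, 10.0), (0.0, 0.0, -5.0)], 1920, 1200))
```

Points behind the camera or outside the image are dropped, so only LiDAR returns that actually fall on the image remain available for camera-LiDAR fusion.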

May 2022: The R1 release, TUMTraf A9 Highway Extended, provides time-synchronized multi-sensor data recorded for three different traffic scenarios on the A9 autobahn. It includes labeled ground truth for typical extreme weather situations that occur on the autobahn in winter. Heavy snow combined with strong gusts of wind, as well as dense fog, have always posed challenges for vision-based driving assistance and automation systems. With this release, we offer researchers and engineers a new set of extreme-weather ground truth data for developing robust, weather-proof vision-based systems. R1 also includes an extended version of the high-speed crash on the autobahn that was published in the previous release, so the time periods before and after the crash can now be investigated in more detail.

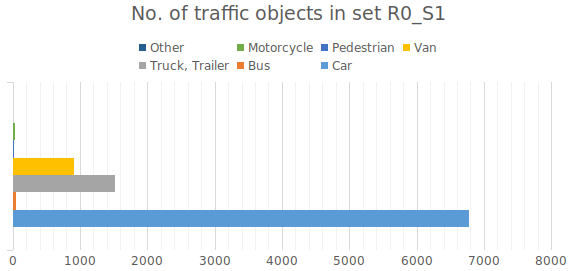

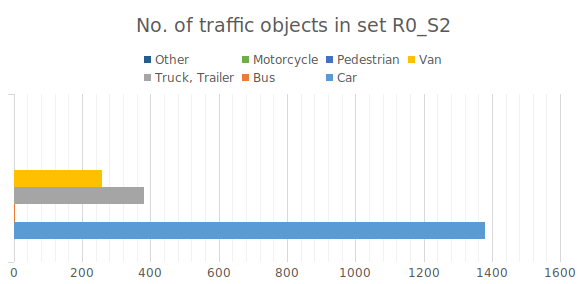

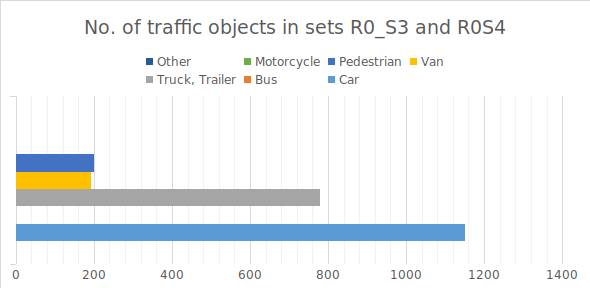

March 2022: The R0 release TUMTraf A9 Highway contains labeled multi-sensor data with a mix of random and sequential traffic scenarios from the A9 autobahn. The data from this set can be used as ground truth for the development and verification of AI-based detectors, tracking, and fusion algorithms, and to understand and analyze the occurrence and the after-effects of a typical high-speed crash incident on the autobahn.

More details on the TUMTraf dev-kit can be found at https://github.com/tum-traffic-dataset/tum-traffic-dataset-dev-kit.

Roadmap

Upcoming releases will contain, among other things, digital twins with trajectory and position information, new traffic scenarios, longer sequences, and new locations, including an urban traffic intersection. Keep watching this space for more.

Citation

- Release 4: TUMTraf V2X Cooperative Perception Dataset

@inproceedings{zimmer2024tumtrafv2x,
title={TUMTraf V2X Cooperative Perception Dataset},
author={Zimmer, Walter and Wardana, Gerhard Arya and Sritharan, Suren and Zhou, Xingcheng and Song, Rui and Knoll, Alois C.},
booktitle={2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR},
year={2024},
organization={IEEE}
}

- Release 3: TUMTraf Event Dataset

@article{cress2024tumtrafevent,
title={TUMTraf Event: Calibration and Fusion Resulting in a Dataset for Roadside Event-Based and RGB Cameras},
author={Cre{\ss}, Christian and Zimmer, Walter and Purschke, Nils and Doan, Bach Ngoc and Kirchner, Sven and Lakshminarasimhan, Venkatnarayanan and Strand, Leah and Knoll, Alois C},
journal={arXiv preprint},
url={https://arxiv.org/abs/2401.08474},
year={2024}
}

- Release 2: TUMTraf Intersection Dataset [IEEE Best Student Paper Award]

@inproceedings{zimmer2023tumtrafintersection,
title={TUMTraf Intersection Dataset: All You Need for Urban 3D Camera-LiDAR Roadside Perception},
author={Zimmer, Walter and Cre{\ss}, Christian and Nguyen, Huu Tung and Knoll, Alois C},
booktitle={2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC)},
pages={1030--1037},
year={2023},
organization={IEEE}
}

- Release 1: TUMTraf A9 Highway Dataset

@inproceedings{cress2022tumtrafa9,
title={A9-dataset: Multi-sensor infrastructure-based dataset for mobility research},
author={Cre{\ss}, Christian and Zimmer, Walter and Strand, Leah and Fortkord, Maximilian and Dai, Siyi and Lakshminarasimhan, Venkatnarayanan and Knoll, Alois},
booktitle={2022 IEEE Intelligent Vehicles Symposium (IV)},
pages={965--970},
year={2022},
organization={IEEE}
}

We ask users to cite the corresponding paper in their work.

Release R4 – V2X Cooperative Perception Dataset

The TUMTraf-V2X Dataset contains ten different subsets (scenarios), S01 to S10. In total, 2,000 point clouds and 5,000 images from five roadside and four onboard sensors were labeled, yielding 30k 3D boxes with track IDs, complemented by precise GPS and IMU data. We labeled eight object categories and covered occlusion scenarios with challenging driving maneuvers, such as traffic violations, near-miss events, overtaking, and U-turns, during day and night.

| Scenario | Description |
|---|---|
| R4_S1 | In this scenario, the ego vehicle's view is occluded by two buses and two large trucks. The roadside sensors extend the perception range, making traffic participants behind the buses visible. |
| R4_S2 | This sequence, with 3,273 3D boxes, shows multiple occlusion scenarios. In one of them, a truck occludes multiple pedestrians. |
| R4_S3 | In this drive, a bus occludes a car that the roadside sensors can still perceive. |
| R4_S4 | In this drive, many vehicles perform a U-turn maneuver and occlude pedestrians waiting at a red traffic light. The pedestrians are within the field of view of the roadside sensors and can be perceived. |
| R4_S5 | In this drive, multiple trucks and trailers occlude traffic participants. These traffic participants are visible from the elevated roadside cameras and LiDAR mounted on the infrastructure. |
| R4_S6 | In this scenario, a truck occludes multiple objects that can be perceived by the roadside camera and LiDAR. |
| R4_S7 | In this example, a motorcyclist overtakes the ego vehicle while it gives way to pedestrians crossing the road. |
| R4_S8 | This night scene contains a traffic violation and is the largest sequence in the dataset. A pedestrian runs the red light after a fast-moving vehicle has crossed the intersection. |
| R4_S9 | In this scenario, the ego vehicle brakes to avoid pedestrians. |
| R4_S10 | In this night drive, the ego vehicle performs a U-turn. |
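Cooperative perception requires expressing onboard and roadside detections in a common frame, which is what the vehicle's GPS and IMU pose makes possible. A minimal planar sketch follows, assuming the ego pose is already available as (x, y, yaw) in a shared world frame; `Pose2D`, `wrap_angle` and `vehicle_to_world` are hypothetical names, and a full pipeline would use 3D poses and the dataset's own calibration.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    """Hypothetical planar ego pose in a shared world frame (meters, radians)."""
    x: float
    y: float
    yaw: float


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def vehicle_to_world(px: float, py: float, box_yaw: float,
                     ego: Pose2D) -> Tuple[float, float, float]:
    """Map a box center and heading from the ego-vehicle frame to the world frame.

    The point is rotated by the ego heading and then translated by the ego position;
    the box heading is composed with the ego heading.
    """
    c, s = math.cos(ego.yaw), math.sin(ego.yaw)
    wx = ego.x + c * px - s * py
    wy = ego.y + s * px + c * py
    return wx, wy, wrap_angle(box_yaw + ego.yaw)


# Ego vehicle at (100, 50) heading along +y; an object 10 m straight ahead.
ego = Pose2D(x=100.0, y=50.0, yaw=math.pi / 2)
print(vehicle_to_world(10.0, 0.0, 0.0, ego))
```

Once onboard boxes are in the same frame as the roadside detections, boxes of objects seen by both sides can be associated and merged, which is how occluded participants become visible to the ego vehicle.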

Release R3 – Spatiotemporally synchronized event-based and RGB camera dataset

The TUMTraf Event Dataset contains synchronized images from an event-based and an RGB camera, as well as a combined representation, with 50,496 2D bounding boxes. The dataset covers different traffic scenarios during the day and at night. Among other things, TUMTraf Event enables research into the fusion of both sensor systems on stationary intelligent infrastructure. Our fusion combines the strengths of both sensor systems while compensating for their respective weaknesses. As a result, it noticeably reduced false-positive detections from the RGB camera and thus increased detection performance. The results are shown in the video below:

Release R2 – Complex traffic scenarios at a busy intersection

| Sequence | Description |
|---|---|
| R2_S1 and R2_S2 | Each of these sequences contains 30 seconds of traffic at a busy intersection, recorded during the day with two synchronized LiDARs and two cameras. The objects are labeled with 3D bounding boxes and unique track IDs, which enables continuous object tracking and data fusion. |
| R2_S3 | In contrast to R2_S1 and R2_S2, this sequence contains 120 contiguous seconds of traffic at a road intersection, also recorded during the day with two LiDARs and two cameras. The objects are labeled with 3D bounding boxes and unique track IDs, which allow continuous object tracking and data fusion. |
| R2_S4 | This sequence contains a 30-second recording made on a rainy night. As in the other sequences, the objects are labeled with 3D bounding boxes and unique track IDs. |
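Unique track IDs make it possible to link per-frame boxes into object trajectories. The sketch below assumes a hypothetical flattened label record `(frame_index, track_id, x, y, z)` with box centers in meters; it does not reproduce the TUMTraf annotation format (the dev-kit is the reference for that), and `build_tracks` and `path_length` are illustrative names.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical flattened label record: (frame_index, track_id, x, y, z),
# with the 3D box center in meters. This is not the TUMTraf file format.
Label = Tuple[int, str, float, float, float]
Sample = Tuple[int, float, float, float]


def build_tracks(labels: List[Label]) -> Dict[str, List[Sample]]:
    """Group per-frame box centers by track ID and sort each track by frame."""
    tracks: Dict[str, List[Sample]] = defaultdict(list)
    for frame, track_id, x, y, z in labels:
        tracks[track_id].append((frame, x, y, z))
    for samples in tracks.values():
        samples.sort(key=lambda s: s[0])
    return dict(tracks)


def path_length(samples: List[Sample]) -> float:
    """Sum of straight-line distances between consecutive box centers (meters)."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))


labels = [(1, "car_7", 3.0, 4.0, 0.0), (0, "car_7", 0.0, 0.0, 0.0), (0, "ped_2", 5.0, 5.0, 0.0)]
tracks = build_tracks(labels)
print(path_length(tracks["car_7"]))  # 5.0
```

Sorting by frame index matters because labels are not guaranteed to arrive in temporal order; distances are only meaningful between consecutive samples of the same track.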

Release R1 – Traffic Scenarios from the Autobahn

| R1_S1 | This set contains a 30-second multi-sensor sequence recorded in winter under heavy snow. The data consists of time-synchronized images recorded at 10 fps by four cameras observing a 400 m test stretch from multiple perspectives. The traffic objects are labeled with 3D bounding boxes and unique IDs within each sensor frame to enable subsequent object tracking and data fusion. |

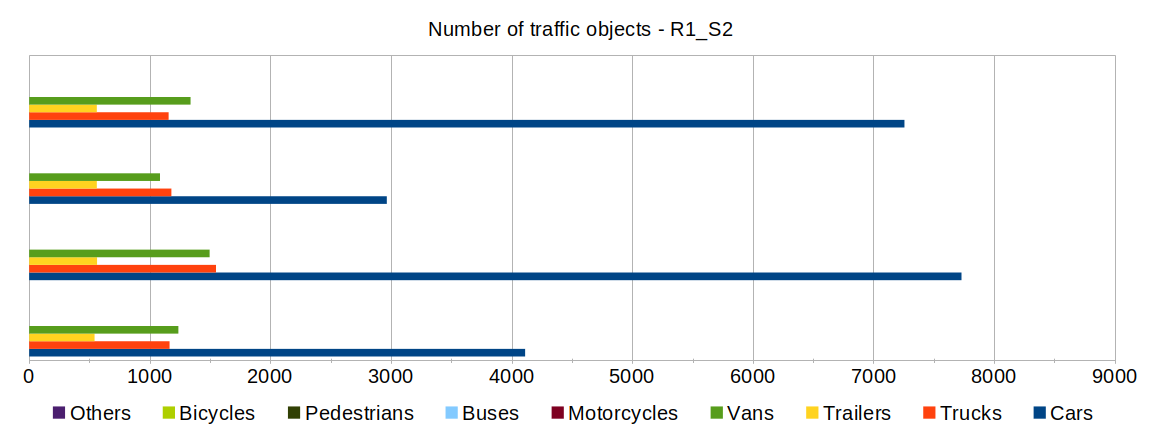

| R1_S2 | This set contains a 30-second multi-sensor sequence recorded in heavy fog. |

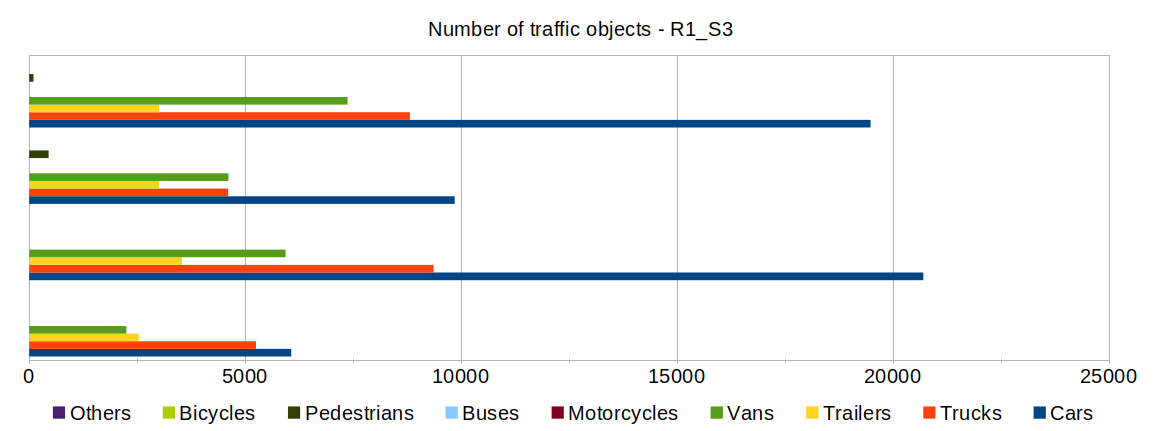

| R1_S3 | This set contains a 60-second multi-sensor sequence covering the period before, during and after a traffic accident. |

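The R1 sequences are already time-synchronized. To illustrate the underlying idea of pairing frames from two cameras running at 10 fps, the sketch below matches each frame to the nearest timestamp of another camera and accepts the match only within half a frame period (50 ms). The timestamps and the function names `nearest_index` and `pair_frames` are hypothetical.

```python
import bisect
from typing import List, Optional, Tuple


def nearest_index(t: float, stamps: List[float], tol: float) -> Optional[int]:
    """Index of the sorted timestamp closest to t, or None if it is more than tol away."""
    i = bisect.bisect_left(stamps, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(stamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(stamps[j] - t))
    return best if abs(stamps[best] - t) <= tol else None


def pair_frames(cam_a: List[float], cam_b: List[float],
                fps: float = 10.0) -> List[Tuple[int, int]]:
    """Pair each cam_a frame with the nearest cam_b frame within half a frame period."""
    tol = 0.5 / fps  # 0.05 s at 10 fps
    pairs = []
    for i, t in enumerate(cam_a):
        j = nearest_index(t, cam_b, tol)
        if j is not None:
            pairs.append((i, j))
    return pairs


# Hypothetical timestamps in seconds; both lists must be sorted.
cam_a = [0.00, 0.10, 0.20]
cam_b = [0.01, 0.11, 0.35]
print(pair_frames(cam_a, cam_b))
```

Binary search keeps each lookup logarithmic in the number of frames. With equal frame rates and a half-period tolerance, a cam_b frame is rarely claimed twice, but the sketch does not enforce a one-to-one assignment.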